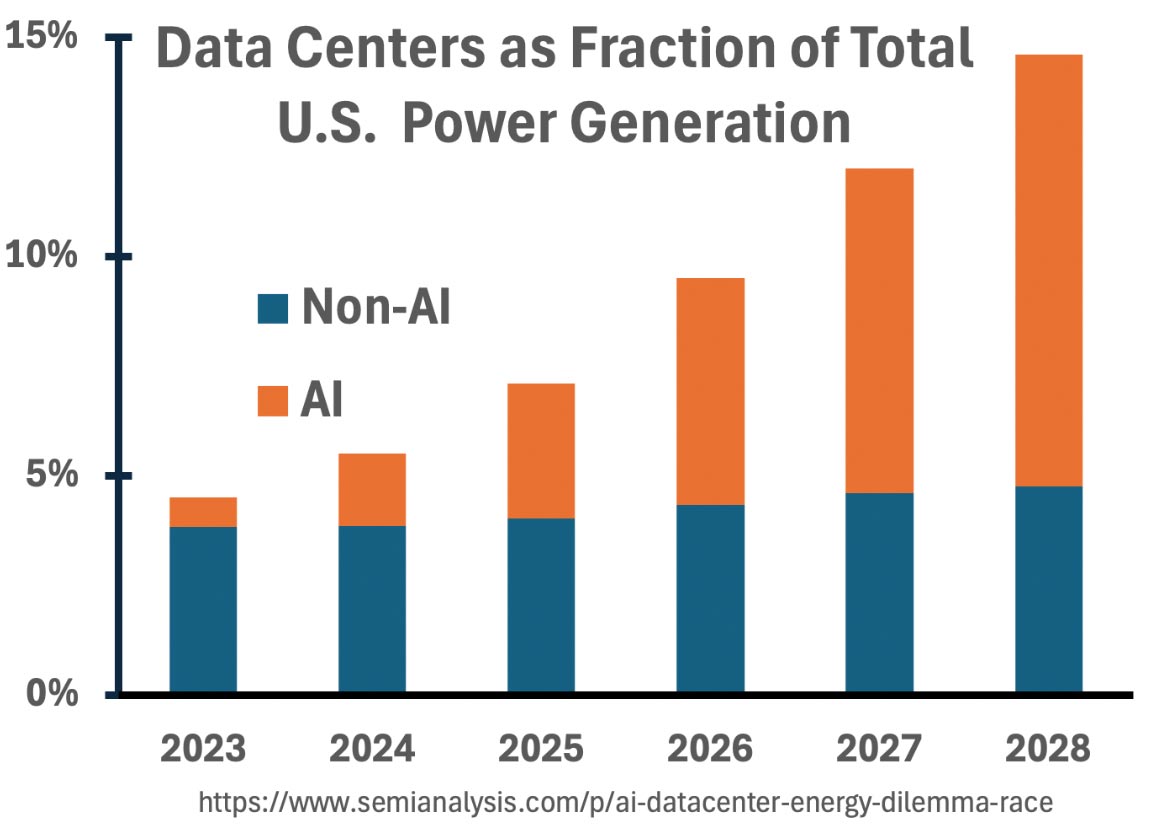

As we aim to advance the study of Safe, Secure, and Trustworthy AI, we must do so in a sustainable and efficient manner. The recent advent of Large Language Models has heightened the need for efficient and environmentally responsible AI systems. For example, training GPT-3 alone emitted over 500 tons of carbon (i.e., the equivalent of the average annual emissions of 100 cars). This trend is only accelerating, with data centers alone projected to account for as much as 14% of U.S. energy consumption by 2030 (see attached Figure with data from https://www.semianalysis.com/p/ai-datacenter-energy-dilemma-race). Recent AI accelerators promise to speed up workloads, often touting high FLOP counts, large memory bandwidth, or novel dataflow architectures. However, with the plethora of specialized non-GPU accelerators available today, researchers are often left wondering which is the best choice for a given workload. This poses a high barrier to entry, as it is often unknown how a given workload will perform on a novel system. A suboptimal selection could incur costly overheads, including a large sustainability footprint that could otherwise be avoided.

In response, we propose an integrated research plan with three vectors (carbon attribution, heterogeneous processor management, and heterogeneous model management) that together form a comprehensive approach to sustainable computing. First, power and carbon analysis estimates the environmental impact of artificial intelligence (AI), enabling intelligent decision-making when scheduling workloads and allocating resources. Second, provisioning heterogeneous processors allows a system to leverage diverse capabilities, matching computational tasks to the appropriate hardware to optimize performance and energy efficiency. Third, training heterogeneous AI models allows a system to perform computation judiciously, matching inference queries to the appropriate model to optimize accuracy and resource efficiency.

This project is a supplement to Carbon Connect, our NSF Expedition in Computing that is (i) developing carbon accounting strategies for computing technology; (ii) mitigating embodied carbon by re-thinking hardware design; (iii) reducing operational carbon by re-thinking systems management; and (iv) balancing the complex interplay between embodied and operational carbon. This supplement through NAIRR will enable us to explore the performance, efficiency, and sustainability of diverse AI architectures and hardware platforms.