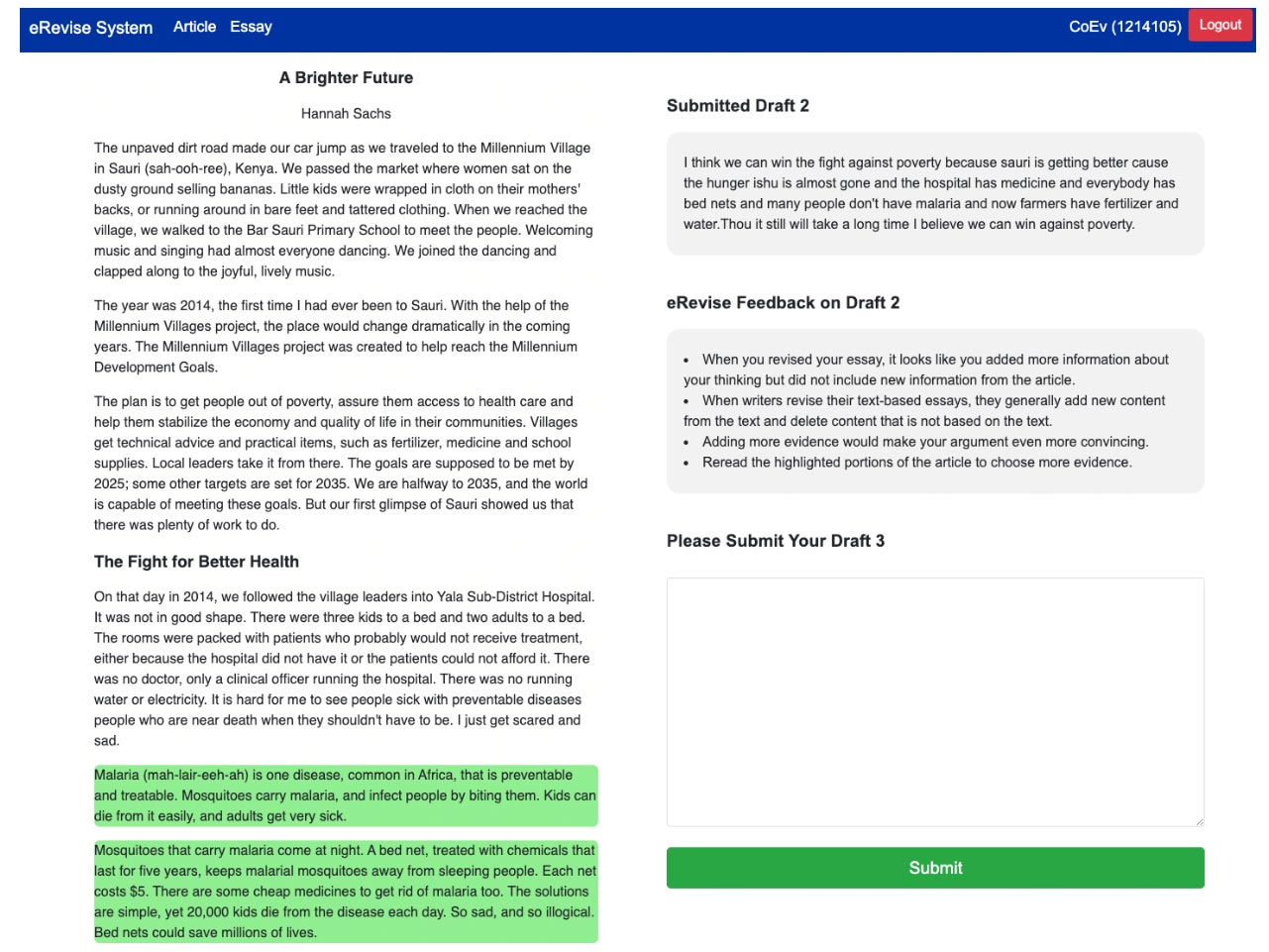

Recognizing the importance of argumentative writing, researchers have developed multiple educational technologies driven by natural language processing (NLP) to support students and teachers in writing and revising argumentative essays. However, evidence that such systems improve writing skills is modest. One reason is that many students lack the strategic knowledge and skills needed to revise their essays, even after receiving writing feedback. The research team is therefore developing the eRevise+RF system, which leverages NLP to provide students with formative feedback on the quality of their revisions. The team is 1) developing new measures of revision quality in response to formative feedback on evidence use and establishing their reliability and validity, 2) using NLP to automate the scoring of revisions with these measures, 3) providing formative feedback to students based on the automated revision scoring, and 4) evaluating the utility of this feedback for improving student writing and revision in classroom settings. The team hypothesizes that such a system will improve students' implementation of feedback messages on text-based argument writing, leading to more successful revision and, ultimately, more successful writing.

Development of Natural Language Processing Techniques to Improve Students' Revision of Evidence Use in Argument Writing (Supplement)

Project leads and key team members

Diane J. Litman (Principal Investigator, University of Pittsburgh), Richard Correnti (Co-Principal Investigator, University of Pittsburgh), Lindsay Clare Matsumura (Co-Principal Investigator, University of Pittsburgh), Elaine Wang (Principal Investigator, RAND Corporation)

Broader Impacts

Automatic writing evaluation technology has the potential to prepare a new generation of students to write and revise argumentative essays productively, a skill they will need in the educational and workplace settings of the future. For learning researchers and educators, the revision quality measures will provide detailed information about how students implement formative feedback. For technology researchers, the automated revision scoring will extend prior writing analysis research in novel ways, e.g., by assessing the quality of revisions between essay drafts and by incorporating alignment with prior formative feedback into the assessment.

More information

To learn more about how the Development of Natural Language Processing Techniques to Improve Students' Revision of Evidence Use in Argument Writing project can meet your needs, contact our team directly at dlitman@Pitt.edu or visit our website at https://sites.google.com/view/erevise/home.

This work is supported by supplemental funding to National Science Foundation Grant No. 2202347.