The Partnership to Advance Throughput Computing (PATh) is the NSF’s premier investment in throughput computing. PATh innovates in technologies, developing the HTCondor Software Suite (HTCSS), and uses a translational computer science approach to move new ideas into the S&E community. PATh will launch a new collaboration with a group of domain scientists and ML researchers to run a pathfinder project profiling the effects of throughput training and inference on distributed, heterogeneous capacity. The goals of this activity will be threefold:

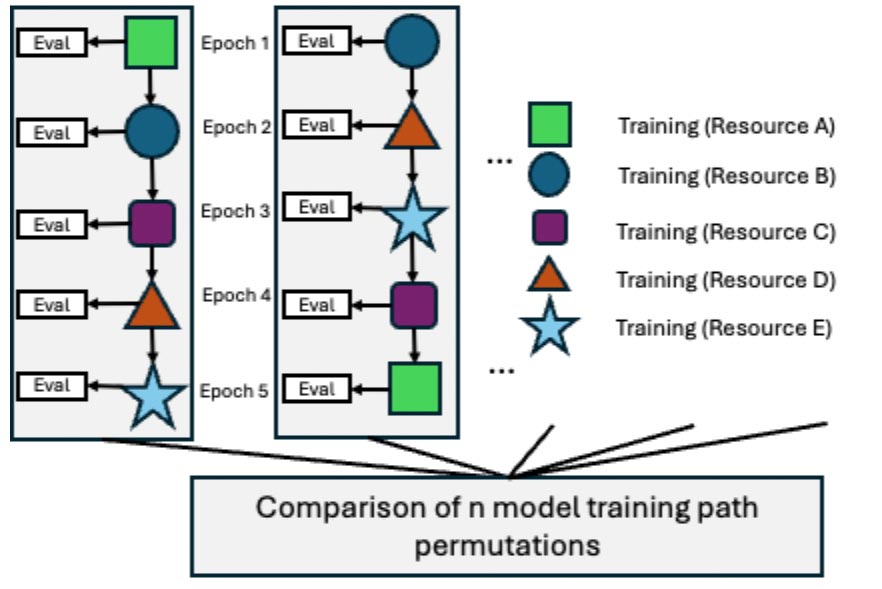

1. Characterize the impact of training ensembles across heterogeneous resources, as opposed to the traditional approach of training on a single homogeneous cluster.

2. Use the workloads developed under goal 1 to improve PATh capabilities and services, lowering the barrier to entry for new ML researchers who want to use PATh services to train ensembles on capacity provided by the National Artificial Intelligence Research Resource (NAIRR) pilot.

3. Demonstrate that a single workload, managed by a single Access Point, can run effectively across as many NAIRR pilot resources as possible.